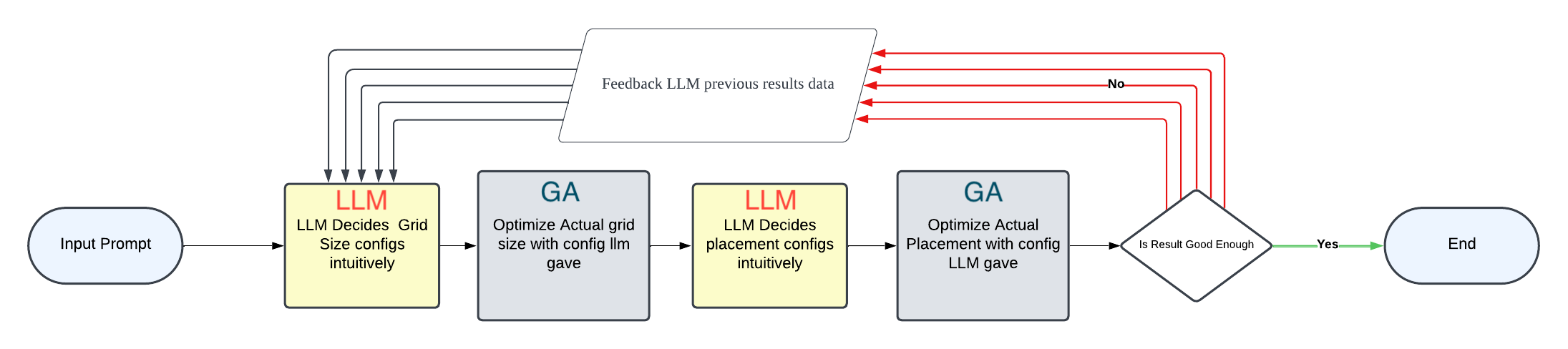

Zone Subdivision With LLM - Expanded Self Feedback Cycle

Introduction

In this post, we explore the use of Large Language Models (LLMs) in a feedback loop to enhance the quality of results through iterative cycles. The goal is to improve the initial intuitive results provided by LLMs by engaging in a cycle of feedback and optimization, extending beyond the internal feedback mechanisms of LLMs.

Concept


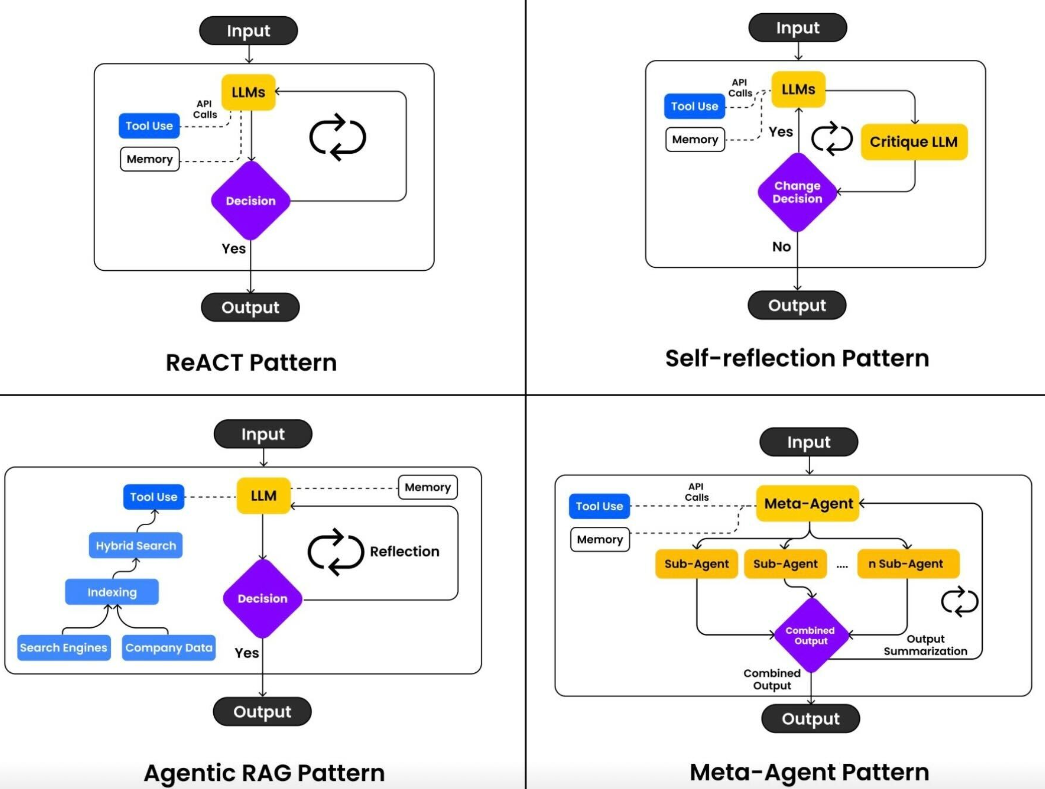

LLMs can improve their own results through self-feedback, a widely used technique. However, a single user request rarely yields a complete result. By asking again and exchanging feedback on the initial results, we aim to improve the quality of the responses. This process involves not only feedback inside the LLM but also feedback in a larger cycle that includes algorithms, so that results keep improving from one cycle to the next.

- Self-feedback outside LLM API In This Work:

- Self-feedback inside LLM API examples:

- Significance of Using LLMs:

- Acts as a bridge between vague user needs and specific algorithmic criteria.

- Understands cycle results and reflects them in subsequent cycles to improve responses.

Premises

- Two Main Components:

- LLM:

- Used for intuitive selection.

- Bridges the gap between the user’s relatively vague intentions and the optimizer’s specific criteria.

- Heuristic Optimizer (GA):

- Used for relatively specific optimization.


- Use Cycles:

- By repeating the cycle, we aim to create a structure that approaches the intended results on its own.
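The two components and the repeated cycle can be sketched roughly as below. This is only an illustration, not the implementation used in this work: `run_cycles`, `fake_llm`, and `fake_optimizer` are hypothetical names, the criteria and results are plain dictionaries, and the stand-in functions replace what would be a real LLM API call and a GA run.

```python
from typing import Callable, Optional

# Hypothetical types for this sketch: criteria and results are plain dicts.
Criteria = dict
Result = dict


def run_cycles(
    prompt: str,
    ask_llm: Callable[[str, Optional[Criteria], Optional[Result]], Criteria],
    optimize: Callable[[Criteria], Result],
    n_cycles: int = 3,
) -> list:
    """Repeat [LLM answer -> optimize with the answer], feeding results back."""
    history = []
    prev_criteria, prev_result = None, None
    for _ in range(n_cycles):
        # The LLM sees the prompt plus the previous answer and its outcome.
        criteria = ask_llm(prompt, prev_criteria, prev_result)
        # The optimizer turns the criteria into a concrete result.
        result = optimize(criteria)
        history.append((criteria, result))
        prev_criteria, prev_result = criteria, result
    return history


# Stand-ins for illustration only (assumptions, not real components).
def fake_llm(prompt, prev_criteria, prev_result):
    if prev_criteria is None:
        return {"percentage_of_mixture": 50.0}
    # Nudge the request by half the gap the optimizer reported last time.
    requested = prev_criteria["percentage_of_mixture"]
    gap = requested - prev_result["achieved_mixture"]
    return {"percentage_of_mixture": requested + gap / 2}


def fake_optimizer(criteria):
    # Pretend the optimizer only reaches 80% of the requested mixture.
    return {"achieved_mixture": criteria["percentage_of_mixture"] * 0.8}


history = run_cycles("mixed spaces", fake_llm, fake_optimizer, n_cycles=3)
for criteria, result in history:
    print(criteria, result)
```

Passing the LLM and the optimizer in as callables keeps the loop itself independent of any particular API or optimizer, which is one way to keep the larger feedback cycle easy to test.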

Details

- Parameter Conversion:

- Use the LLM with structured output to convert intuitive selections into explicit parameters for the algorithm.

- Adjust the number of additional subdivisions based on zone grid and grid size.

  - `number_additional_subdivision_x: int`
  - `number_additional_subdivision_y: int`

- Prioritize placement close to boundaries for each use based on prompts.

  - `place_rest_close_to_boundary: bool`
  - `place_office_close_to_boundary: bool`
  - `place_lobby_close_to_boundary: bool`
  - `place_coworking_close_to_boundary: bool`

- Ask about the desired percentage of mixture for different uses in adjacent patches.

  - `percentage_of_mixture: number`

- Inquire about the percentage each use should occupy.

  - `office: number`
  - `coworking: number`
  - `lobby: number`
  - `rest: number`

- Optimization Based on LLM Responses:

- Use LLM responses as optimization criteria, transforming user thoughts into specific criteria.

- Incorporate Optimization Results into Next Cycle:

- Pass the optimization results to the LLM as reference material in the next cycle, so that each additional [LLM answer - optimize results with the answer] pass builds on the outcome of the previous one.

- Cycle Structure:

- One Cycle:

- First [LLM answer - optimize results with the answer]:

- Ask LLM about subdivision criteria based on the prompt before optimizing zone usage.

- Second [LLM answer - optimize results with the answer]:

- Request intuitive answers from LLM regarding zone configuration based on the prompt.


- After the Second Cycle:

- After the second cycle, directly request improvement in responses by providing the actual optimization results along with the previous LLM responses.

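The parameter conversion and feedback steps described in this section can be sketched as follows. This is a minimal sketch under several assumptions: the LLM's structured output is taken to arrive as a JSON string, the function names (`parse_zone_criteria`, `measure`, `penalty`, `feedback_text`) are hypothetical, use percentages are normalized to sum to 100, and "percentage of mixture" is interpreted here as the share of horizontally or vertically adjacent cell pairs with different uses. The actual definitions in this work may differ.

```python
import json
from dataclasses import dataclass

USES = ("office", "coworking", "lobby", "rest")


@dataclass
class ZoneCriteria:
    percentage_of_mixture: float
    ratios: dict  # use name -> target percentage of cells


def parse_zone_criteria(raw: str) -> ZoneCriteria:
    """Turn the LLM's JSON answer into typed optimization criteria."""
    data = json.loads(raw)
    ratios = {use: float(data[use]) for use in USES}
    total = sum(ratios.values())
    if total <= 0:
        raise ValueError("use percentages must sum to a positive number")
    # Assumption: normalize so the targets always sum to 100.
    ratios = {use: 100 * value / total for use, value in ratios.items()}
    return ZoneCriteria(float(data["percentage_of_mixture"]), ratios)


def measure(grid):
    """Return (use percentages, mixture percentage) for a rectangular grid."""
    cells = [cell for row in grid for cell in row]
    ratios = {use: 100 * cells.count(use) / len(cells) for use in USES}
    pairs = mixed = 0
    for y, row in enumerate(grid):
        for x, cell in enumerate(row):
            # Look at the right and lower neighbours so each pair counts once.
            for ny, nx in ((y, x + 1), (y + 1, x)):
                if ny < len(grid) and nx < len(row):
                    pairs += 1
                    mixed += grid[ny][nx] != cell
    mixture = 100 * mixed / pairs if pairs else 0.0
    return ratios, mixture


def penalty(criteria: ZoneCriteria, grid) -> float:
    """Lower is better: distance between achieved and requested values."""
    ratios, mixture = measure(grid)
    ratio_error = sum(abs(ratios[u] - criteria.ratios[u]) for u in USES)
    return ratio_error + abs(mixture - criteria.percentage_of_mixture)


def feedback_text(criteria: ZoneCriteria, grid) -> str:
    """Summarize requested vs. achieved values for the next LLM request."""
    ratios, mixture = measure(grid)
    lines = [
        f"mixture: requested {criteria.percentage_of_mixture:.1f}%, "
        f"achieved {mixture:.1f}%"
    ]
    for use in USES:
        lines.append(
            f"{use}: requested {criteria.ratios[use]:.1f}%, "
            f"achieved {ratios[use]:.1f}%"
        )
    return "\n".join(lines)


raw = '{"percentage_of_mixture": 75, "office": 50, "coworking": 0, "lobby": 25, "rest": 25}'
criteria = parse_zone_criteria(raw)
grid = [["office", "office"], ["lobby", "rest"]]
print(penalty(criteria, grid))
print(feedback_text(criteria, grid))
```

A penalty of this kind could serve as the fitness value a GA minimizes, and the text from `feedback_text` could be appended to the next request so the LLM sees how far the last result was from what it asked for.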

Test Results

Case 1

- Prompt: In a large space, the office spaces are gathered in the center for a common goal. I want to place the other spaces close to the boundary.

- GIFs:

Case 2

- Prompt: The goal is to have teams of about 5 people, working in silos. Therefore, we want mixed spaces where no single use is concentrated.

- GIFs:

Case 3

- Prompt: Prioritize placing the office close to the boundary to easily receive sunlight, and place other spaces inside.

- GIFs:

Conclusion

This work aims to improve final results by expanding the scope of self-feedback beyond the LLM itself to a cycle that includes LLM requests, optimization, and post-processing. As cycles repeat, results approach the intended outcomes, which reduces the reliance on the LLM's initial values and their potential incompleteness.